Docker – JMeter Integration

Docker is an open-source platform that aims to simplify and streamline the software lifecycle. It provides virtualization services that make a working environment easy to replicate, and it supports deployment as modular, scalable services. Every virtualized service is isolated from unrelated services in other containers and on the host machine, which keeps it portable across hosts and networks.

This approach to virtualization is useful when testing applications with Apache JMeter. Because a containerized JMeter setup is replicable, users can share JMeter tests and reproduce the same test environment. That helps spread knowledge between users of different experience levels, saves time because not every user has to set up JMeter from scratch, and keeps the test environment from interfering with their other work.

Docker is based on the following components and ideas:

- Docker image – the description of the service to be virtualized. The image is the starting point for every container. It is a static, optimized file system that the Docker engine reads when a container starts. The image itself is read-only: changes made while a container runs are not written back to it.

- Docker container – the element that runs the virtualized service. It takes the image as a starting point and executes the service to be exposed (e.g. a JMeter instance that runs a test script). The Docker image is often described as a snapshot of the container.

A container includes just the application to be virtualized and its dependencies, so the application sees what looks like its own operating system environment. The container runs in isolation from the other processes on the host operating system, but it shares the host operating system kernel with those processes and with other containers.

- Dockerfile – a text document with the commands necessary to assemble an image. Writing a Dockerfile is not mandatory when using Docker: if an image already exists, you can use it directly.

- Kernel sharing – the basic concept behind how a Docker container works. Many containers share the kernel of the host machine. This is an important difference from the classic virtualization approach (e.g. VirtualBox), where each virtual machine runs its own complete virtualized kernel.

- Resource usage – not only is the kernel shared, but the host machine resources (e.g. CPU, memory, disk space) are shared as well. Docker can split host machine resources among containers so that each one can do its job independently (e.g. a web server goes online, JMeter performs a test, etc.).

- Docker engine – the runtime on the host that builds images and runs containers. It is not a full operating system: it sets up the minimal, isolated environment in which each virtualized application runs, and an application running inside that environment is what makes up the Docker container.
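To make the Dockerfile item above more concrete, here is a minimal Python sketch that renders the text of a Dockerfile layering JMeter on top of a Java base image. The function name, the base image (`eclipse-temurin:17-jre`), the JMeter version, the install path under `/opt`, and the download URL layout are all assumptions chosen for illustration; adjust them to your environment and verify them before building.

```python
def render_jmeter_dockerfile(
    base_image: str = "eclipse-temurin:17-jre",  # assumption: any image that ships a Java runtime
    jmeter_version: str = "5.6.3",               # assumption: use the version you need
) -> str:
    """Return the text of a Dockerfile that installs JMeter on top of a Java image."""
    archive = f"apache-jmeter-{jmeter_version}.tgz"
    # Assumption: JMeter binaries are published under this Apache archive layout.
    url = f"https://archive.apache.org/dist/jmeter/binaries/{archive}"
    install_dir = f"/opt/apache-jmeter-{jmeter_version}"
    lines = [
        f"FROM {base_image}",                           # starting image
        f"ADD {url} /tmp/{archive}",                    # download the archive into the image
        f"RUN tar -xzf /tmp/{archive} -C /opt && rm /tmp/{archive}",
        f'ENTRYPOINT ["{install_dir}/bin/jmeter"]',     # the container runs JMeter directly
    ]
    return "\n".join(lines) + "\n"


print(render_jmeter_dockerfile())
```

Each instruction in the rendered file adds one step on top of the previous one, which is why an existing image can serve as the starting point for a more specialized one.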

Docker works in two phases:

1. Composing the environment to be virtualized, described by a minimal set of information (e.g. which Linux OS, installed shell, JMeter version, etc.). At the end of this step, we get the Docker image, which is the starting point of the next step.

2. Starting from the image, Docker can begin execution by creating a Docker container, which is the virtual environment with the information defined in the previous step. Here the business logic processes run and produce results (e.g. a web application will offer its front-end, a JMeter instance can execute a performance test, etc.).
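The two phases map onto two Docker CLI calls, `docker build` and `docker run`. The sketch below only assembles the command lines as Python lists and does not execute them. It assumes an image whose entrypoint is the `jmeter` launcher; the image tag, the host directory, the `/tests` mount point, and the file names are hypothetical. The `-n`, `-t`, and `-l` arguments are JMeter's non-GUI mode, test plan, and results log options, and `--cpus` and `--memory` are optional Docker resource limits.

```python
import shlex


def build_command(tag: str, context_dir: str = ".") -> list[str]:
    """Phase 1: build an image from the Dockerfile found in context_dir."""
    return ["docker", "build", "-t", tag, context_dir]


def run_command(tag, host_dir, test_plan, results="results.jtl", cpus=None, memory=None):
    """Phase 2: start a container from the image and run one JMeter test plan."""
    mount = "/tests"  # hypothetical mount point inside the container
    cmd = ["docker", "run", "--rm", "-v", f"{host_dir}:{mount}"]
    if cpus is not None:
        cmd += ["--cpus", str(cpus)]    # limit the CPU share for this container
    if memory is not None:
        cmd += ["--memory", memory]     # limit memory, e.g. "2g"
    # Everything after the image tag is passed to the image's entrypoint
    # (assumed here to be the jmeter launcher).
    cmd += [tag, "-n", "-t", f"{mount}/{test_plan}", "-l", f"{mount}/{results}"]
    return cmd


print(shlex.join(build_command("my-jmeter:latest")))
print(shlex.join(run_command("my-jmeter:latest", "/home/user/tests", "plan.jmx", cpus=2, memory="2g")))
```

To actually run the commands, each list could be passed to `subprocess.run(cmd, check=True)` on a machine where Docker is installed.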

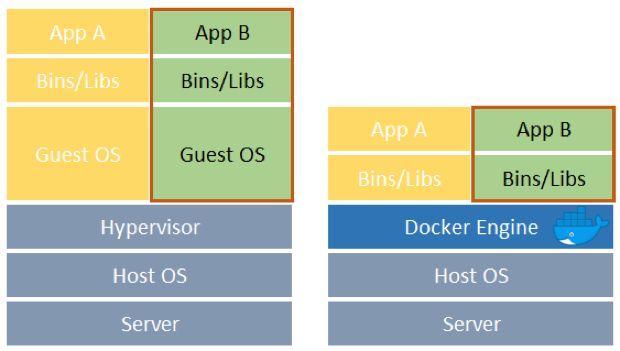

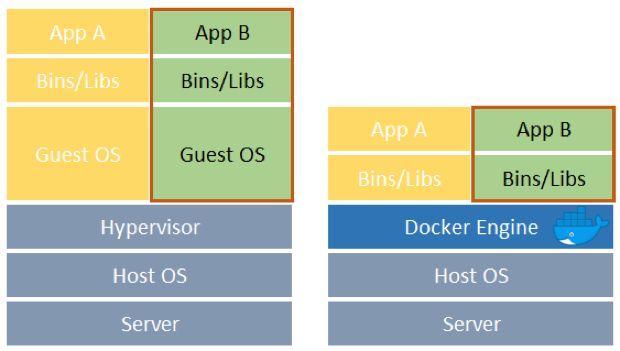

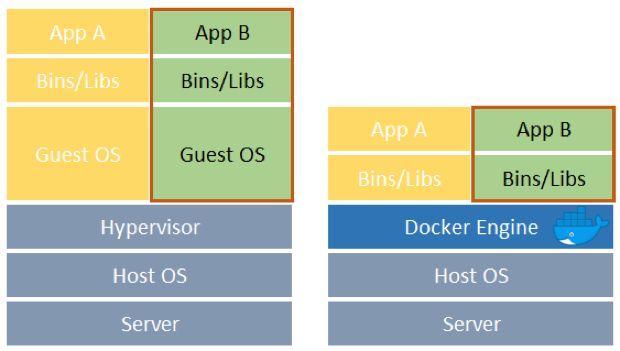

Docker is based on different layers of abstraction compared to the classic virtualization concept.

The left picture describes the classic virtualization stack (e.g. VirtualBox, VMware):

- The lower layer includes the hardware, the host OS, and a virtualization hypervisor.

- On top there are several guest operating systems, each with its own libraries, and only then the applications to be run.

The right picture describes the Docker virtualization stack based on containers:

- The lower layer includes the hardware, the host OS, and the Docker engine.

- On top there are many containers that bring only the libraries and binaries needed to run the requested applications.

Comparing the two stacks, it is clear that the Docker approach to virtualization reduces CPU, memory, and file system usage. The saving comes from two things: the Docker stack does not recreate a separate guest OS for every application, which removes unnecessary duplication, and it does not emulate a complete machine (e.g. standard inputs like the keyboard and mouse and standard outputs like the screen).

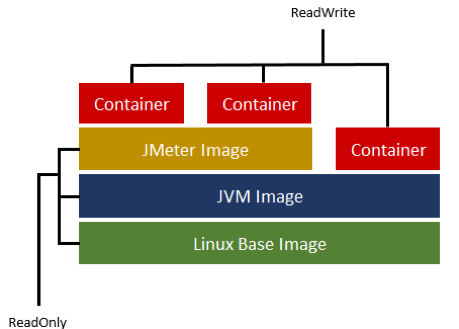

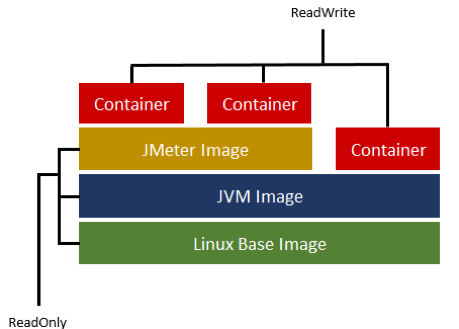

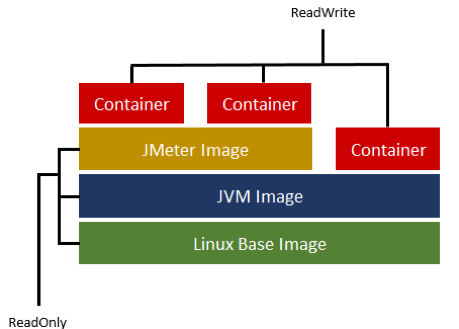

In Docker, the image structure is based on a copy-on-write filesystem that makes it simple to track what each image changes relative to the image it builds on (e.g. a base Linux image is the starting point for installing a Java virtual machine, which in turn is the starting point for installing JMeter). The result can be pictured as a version-controlled wall where each brick is an image snapshot laid on top of another brick.
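The layering idea can be illustrated with a toy model in Python. This is not how Docker stores images; it only mimics the lookup and copy-on-write behavior using `collections.ChainMap`, with made-up paths and file contents. It does not model file deletion.

```python
from collections import ChainMap

# Each dict stands for one read-only image layer (path -> content).
base_layer = {"/bin/sh": "shell", "/etc/os-release": "linux"}
java_layer = {"/opt/java/bin/java": "jvm"}
jmeter_layer = {"/opt/jmeter/bin/jmeter": "jmeter launcher"}

# The image is the stack of layers; lookups search the topmost layer first.
image = ChainMap(jmeter_layer, java_layer, base_layer)

# A container adds an empty writable layer on top of the image.
container = image.new_child()

# Writing goes to the container's own layer; the image layers stay untouched.
container["/etc/os-release"] = "patched"

print(container["/etc/os-release"])  # value from the container's writable layer
print(image["/etc/os-release"])      # value still stored in the base layer
print(container["/opt/java/bin/java"])  # read falls through to the Java layer
```

Because the lower layers are never modified, several containers started from the same image can share them, and each new image only has to store what it adds or changes.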

Another important feature related to Docker images is Docker Hub, which allows sharing built images with other Docker users. This infrastructure makes it possible to reuse an existing image, apply modifications, and share the resulting new image.
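The reuse, modify, and share cycle corresponds to three Docker CLI calls: `docker pull`, `docker build`, and `docker push`. The sketch below only lists them as Python data and does not run anything. Both image names are hypothetical, and it assumes the build context contains a Dockerfile whose `FROM` line names the pulled image and that you have already authenticated with `docker login`.

```python
def share_workflow(base_image: str, new_image: str, context_dir: str = ".") -> list[list[str]]:
    """Return the CLI calls for reusing an image, modifying it, and sharing the result."""
    return [
        ["docker", "pull", base_image],                      # reuse: fetch an existing image
        ["docker", "build", "-t", new_image, context_dir],   # modify: build a new image on top
        ["docker", "push", new_image],                       # share: publish the new image
    ]


# Hypothetical image names, for illustration only.
for step in share_workflow("example/jmeter-base:latest", "myuser/jmeter-custom:1.0"):
    print(" ".join(step))
```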